GRAM-HD: 3D-Consistent Image Generation at High Resolution with Generative Radiance Manifolds

ICCV 2023

- Jianfeng Xiang 1,2

- Jiaolong Yang 2

- Yu Deng 1,2

- Xin Tong 2

- 1 Tsinghua University

- 2 Microsoft Research Asia

Abstract

Recent works have shown that 3D-aware GANs trained on unstructured single-image collections can generate multiview images of novel instances. The key underpinnings to achieve this are a 3D radiance field generator and a volume rendering process. However, existing methods either cannot generate high-resolution images (e.g., they are limited to at most 256×256) due to the high computation cost of neural volume rendering, or rely on 2D CNNs for image-space upsampling, which jeopardizes 3D consistency across different views. This paper proposes a novel 3D-aware GAN that can generate high-resolution images (up to 1024×1024) while keeping the strict 3D consistency of volume rendering. Our motivation is to achieve super-resolution directly in 3D space to preserve 3D consistency. We avoid the otherwise prohibitively expensive computation cost by applying 2D convolutions on a set of 2D radiance manifolds defined in the recent Generative Radiance Manifold (GRAM) approach, and we apply dedicated loss functions for effective GAN training at high resolution. Experiments on the FFHQ and AFHQv2 datasets show that our method produces high-quality, 3D-consistent results that significantly outperform those of existing methods.
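The cost barrier of neural volume rendering can be made concrete with simple arithmetic: each pixel's ray queries the radiance field at many sample points, so the number of queries grows with the pixel count. The sketch below is illustrative only; it assumes one ray per pixel and a fixed, hypothetical 64 samples per ray (not a value from the paper). Whatever sample count is chosen, going from 256×256 to 1024×1024 multiplies the work by 16.

```python
# Hypothetical sample count for illustration only; not a value from the paper.
SAMPLES_PER_RAY = 64


def radiance_queries(resolution: int, samples_per_ray: int = SAMPLES_PER_RAY) -> int:
    """Radiance-field evaluations needed to volume-render one square image."""
    rays = resolution * resolution  # one camera ray per pixel
    return rays * samples_per_ray   # every ray queries the field at each sample


low = radiance_queries(256)
high = radiance_queries(1024)
print(f"256x256:   {low:,} queries")   # 4,194,304
print(f"1024x1024: {high:,} queries")  # 67,108,864
print(f"ratio:     {high // low}x")    # 16x
```

This counts a single forward rendering only; GAN training also backpropagates through every query, which compounds the cost.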


Overview

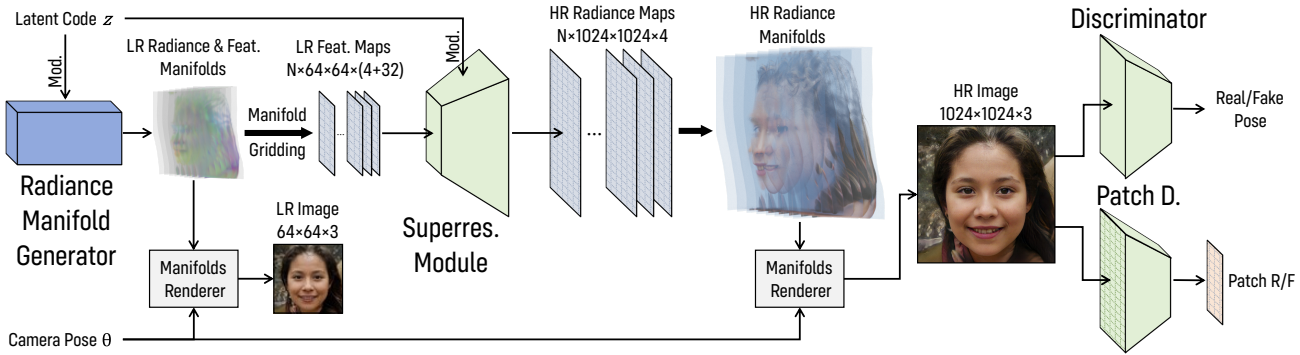

The overall framework of our GRAM-HD method. The generator consists of two components: the radiance manifold generator and the manifold super-resolution module. The former generates radiance and feature manifolds that represent a low-resolution (LR) 3D scene. Through manifold gridding, the manifolds are sampled into discrete 2D feature maps. The super-resolution module then processes these feature maps and outputs high-resolution (HR) radiance maps. Finally, an HR image can be rendered by computing ray-manifold intersections and integrating their radiance sampled from the HR radiance maps.
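The final rendering step can be sketched as bilinear lookups into the HR radiance maps followed by standard front-to-back alpha compositing over a ray's manifold intersections. This is a minimal sketch, not the authors' implementation. It assumes the ray-manifold intersections have already been computed, that each HR radiance map stores RGB plus an alpha (occupancy) channel, and that each hit point comes with normalized (u, v) coordinates into its manifold's map. The functions `bilinear_sample` and `composite_ray` and the input layout are hypothetical.

```python
import numpy as np


def bilinear_sample(radiance_map: np.ndarray, u: float, v: float) -> np.ndarray:
    """Bilinearly sample an (H, W, C) map at normalized coordinates (u, v) in [0, 1]."""
    h, w, _ = radiance_map.shape
    x = float(np.clip(u, 0.0, 1.0)) * (w - 1)
    y = float(np.clip(v, 0.0, 1.0)) * (h - 1)
    x0, y0 = int(np.floor(x)), int(np.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    dx, dy = x - x0, y - y0
    top = (1 - dx) * radiance_map[y0, x0] + dx * radiance_map[y0, x1]
    bottom = (1 - dx) * radiance_map[y1, x0] + dx * radiance_map[y1, x1]
    return (1 - dy) * top + dy * bottom


def composite_ray(radiance_maps, intersections):
    """Alpha-composite one ray's manifold intersections front to back.

    radiance_maps: list of (H, W, 4) arrays, one per manifold, holding RGB + alpha
        (an assumed layout for illustration).
    intersections: list of (manifold_index, u, v, depth) tuples, where (u, v) are
        hypothetical normalized map coordinates of the ray-manifold hit point.
    """
    color = np.zeros(3)
    transmittance = 1.0  # fraction of light not yet absorbed by nearer surfaces
    for idx, u, v, _depth in sorted(intersections, key=lambda hit: hit[3]):
        rgba = bilinear_sample(radiance_maps[idx], u, v)
        alpha = float(np.clip(rgba[3], 0.0, 1.0))
        color += transmittance * alpha * rgba[:3]
        transmittance *= 1.0 - alpha
    return color


# Usage: a semi-transparent red manifold in front of an opaque blue one.
front = np.tile([1.0, 0.0, 0.0, 0.5], (4, 4, 1))
back = np.tile([0.0, 0.0, 1.0, 1.0], (4, 4, 1))
hits = [(1, 0.5, 0.5, 2.0), (0, 0.3, 0.7, 1.0)]
print(composite_ray([front, back], hits))  # approximately [0.5, 0.0, 0.5]
```

Sorting by depth means the intersections can be listed in any order; the nearest manifold always attenuates the ones behind it.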

Generation Results

GRAM-HD can generate high-quality images at high resolution with rich details. Moreover, it allows explicit manipulation of the camera pose while maintaining strong 3D consistency across different views. For thin structures such as human hair, glasses, and cat whiskers, our method produces realistic fine details and correct parallax effects when viewed from different angles.

GRAM-HD achieves image quality comparable to methods that adopt 2D CNNs for image-space upsampling, but with remarkably better 3D consistency, as demonstrated by the following epipolar line images.
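An epipolar line image (EPI) is formed by rendering a sequence of views as the camera moves horizontally and stacking the same scanline from each view. Consistent 3D content leaves smooth, continuous traces across the stack, while view-inconsistent details break them up. The helper below is a minimal sketch with a hypothetical name; it assumes the frames are already rendered at a fixed resolution, treats each scanline as (approximately) an epipolar line for a horizontal camera sweep, and does not reproduce the paper's exact visualization protocol.

```python
import numpy as np


def epipolar_line_image(frames, row):
    """Stack one image row from each frame of a horizontal camera sweep.

    frames: sequence of (H, W, C) images rendered as the camera moves horizontally.
    row: index of the scanline to extract from every frame.
    Returns a (num_frames, W, C) array; consistent 3D content shows up as
    smooth, continuous traces across its rows.
    """
    return np.stack([np.asarray(frame)[row] for frame in frames], axis=0)


# Usage: a synthetic white vertical bar that shifts one pixel per frame,
# mimicking the parallax of a surface point under a horizontal camera move.
frames = []
for t in range(30):
    img = np.zeros((64, 64, 3))
    img[:, 10 + t] = 1.0
    frames.append(img)
epi = epipolar_line_image(frames, row=32)
print(epi.shape)  # (30, 64, 3); the bar appears as a diagonal line in the EPI
```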

Citation

@InProceedings{xiang2023gramhd,
  title     = {GRAM-HD: 3D-Consistent Image Generation at High Resolution with Generative Radiance Manifolds},
  author    = {Xiang, Jianfeng and Yang, Jiaolong and Deng, Yu and Tong, Xin},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  month     = {October},
  year      = {2023},
  pages     = {2195-2205}
}